I love hyperbolic trigonometry. I always include it when I teach calculus, as I think it is important for students to see. Why?

- Many applications in the sciences use hyperbolic trigonometry; for example, the use of Laplace transforms in solving differential equations, various applications in physics, modeling population growth (the logistic model is a hyperbolic tangent curve);

- Hyperbolic trigonometric substitutions are, in many instances, easier than circular trigonometric substitutions, especially when the integrand involves $\sqrt{1+x^2}$ or $\sqrt{x^2-1}$;

- Students get to see another form of trigonometry, and compare the new form with the old;

- Hyperbolic trigonometry is fun.

OK, maybe that last reason is a bit of hyperbole (though not for me).

Not everyone thinks this way. I once had a colleague who told me she did not teach hyperbolic trigonometry because it wasn’t on the AP exam. What do you say to someone who says that? I dunno….

In any case, I want to introduce the subject here for you, and show you some interesting aspects of hyperbolic trigonometry. I’m going to stray from my habit of not discussing things you can find anywhere online, since in order to get to the better stuff, you need to know the basics. I’ll move fairly quickly through the introductory concepts, though.

The hyperbolic cosine and sine are defined by

$$\cosh x = \frac{e^x + e^{-x}}{2}, \qquad \sinh x = \frac{e^x - e^{-x}}{2}.$$

I will admit that when I introduce this definition, I don’t have an accessible, simple motivation for doing so. I usually say we’ll learn a lot more as we work with these definitions, so if anyone has a good idea in this regard, I’d be interested to hear it.
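If you want students to check the definitions numerically, here is a minimal Python sketch. It builds $\cosh$ and $\sinh$ directly from `math.exp` and compares them with the standard library's `math.cosh` and `math.sinh`. The names `cosh_def` and `sinh_def` are only illustrative.

```python
import math

def cosh_def(x: float) -> float:
    """Hyperbolic cosine straight from the definition (e^x + e^-x) / 2."""
    return (math.exp(x) + math.exp(-x)) / 2

def sinh_def(x: float) -> float:
    """Hyperbolic sine straight from the definition (e^x - e^-x) / 2."""
    return (math.exp(x) - math.exp(-x)) / 2

# Compare the definitions with the library versions at a few sample points.
for x in [-2.0, -0.5, 0.0, 1.0, 3.0]:
    print(f"x={x:5.1f}  cosh_def={cosh_def(x):.6f}  math.cosh={math.cosh(x):.6f}  "
          f"sinh_def={sinh_def(x):.6f}  math.sinh={math.sinh(x):.6f}")
```

Near $x = 0$ the subtraction in `sinh_def` loses some relative precision. Library implementations are generally more careful there.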

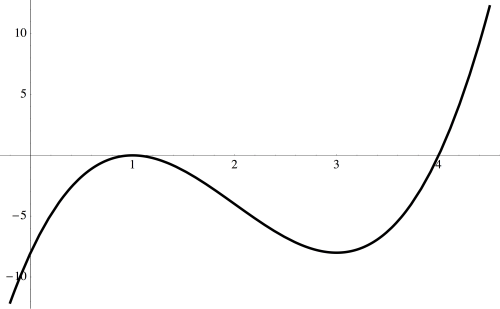

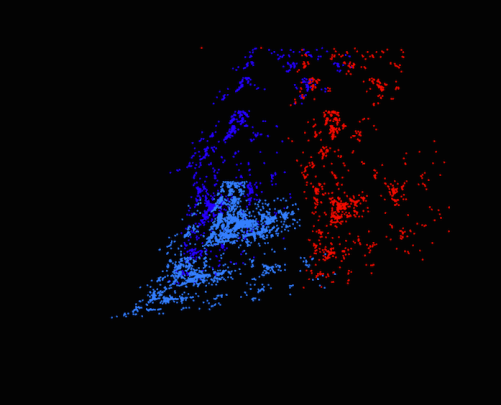

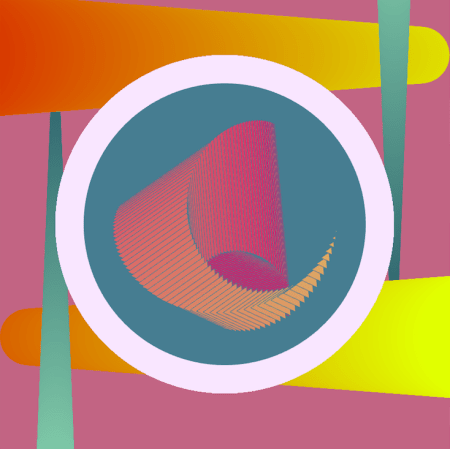

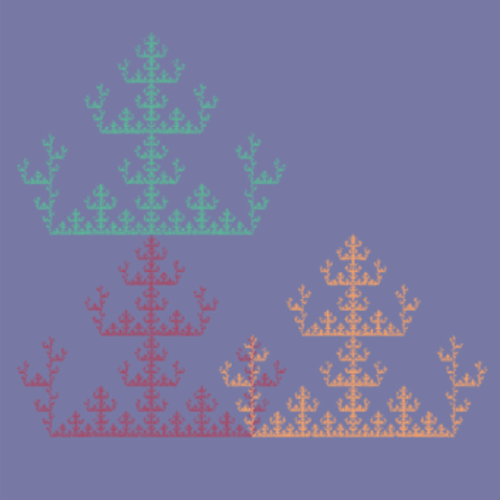

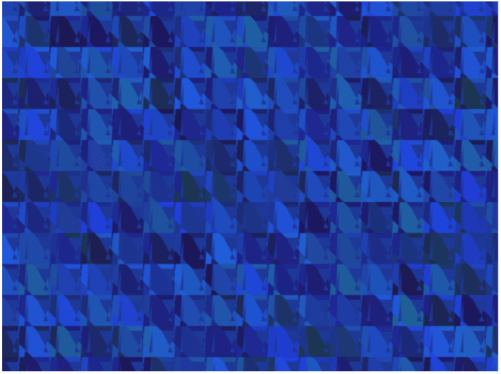

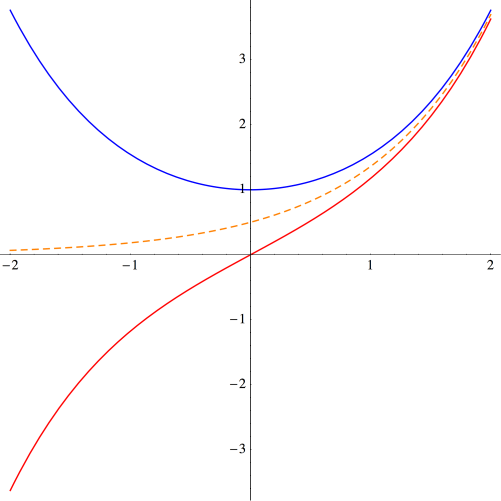

The graphs of these curves are shown below.

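The graph of $y = \cosh x$ is shown in blue, and the graph of $y = \sinh x$ is shown in red. The dashed orange graph is $y = \frac{e^x}{2}$, which is easily seen to be asymptotic to both graphs as $x \to \infty$, since $\cosh x - \frac{e^x}{2} = \frac{e^x}{2} - \sinh x = \frac{e^{-x}}{2} \to 0$.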
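Here is a small Python sketch that shows the asymptote numerically, assuming the orange curve is $y = e^x/2$. From the definitions, $\cosh x - \frac{e^x}{2}$ and $\frac{e^x}{2} - \sinh x$ both equal $\frac{e^{-x}}{2}$. The two gaps printed here should therefore agree with each other and shrink toward 0 as $x$ grows.

```python
import math

def gaps(x: float) -> tuple[float, float]:
    """Return (cosh x - e^x/2, e^x/2 - sinh x); both should equal e^(-x)/2."""
    half_exp = math.exp(x) / 2
    return math.cosh(x) - half_exp, half_exp - math.sinh(x)

for x in [0.0, 2.0, 5.0, 10.0]:
    above, below = gaps(x)
    print(f"x={x:4.1f}  cosh gap={above:.2e}  sinh gap={below:.2e}  e^-x/2={math.exp(-x) / 2:.2e}")
```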

Parallels to the circular trigonometric functions are already apparent: $\cosh x$ is an even function, just like $\cos x$. Similarly, $\sinh x$ is odd, just like $\sin x$.

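Another parallel, which is only slightly less apparent, is the fundamental relationship

$$\cosh^2 x - \sinh^2 x = 1.$$

Thus, the point $(\cosh t, \sinh t)$ lies on the unit hyperbola $x^2 - y^2 = 1$, much like $(\cos t, \sin t)$ lies on the unit circle $x^2 + y^2 = 1$.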
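This quick Python check covers both parallels. It tests the parity of $\cosh$ and $\sinh$, and it confirms that $(\cosh t, \sinh t)$ satisfies $x^2 - y^2 = 1$. The sample values of $t$ are arbitrary.

```python
import math

for t in [-3.0, -1.0, 0.0, 0.5, 2.0]:
    c, s = math.cosh(t), math.sinh(t)
    assert math.isclose(math.cosh(-t), c)    # cosh is even
    assert math.isclose(math.sinh(-t), -s)   # sinh is odd
    # (c, s) should lie on the unit hyperbola x^2 - y^2 = 1
    print(f"t={t:5.2f}  point=({c:.4f}, {s:.4f})  x^2 - y^2 = {c * c - s * s:.12f}")
```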

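While there isn’t a simple parallel with circular trigonometry, there is an interesting way to characterize $\cosh x$ and $\sinh x$.

Recall that given any function $f(x)$, we may define

$$f_e(x) = \frac{f(x) + f(-x)}{2}, \qquad f_o(x) = \frac{f(x) - f(-x)}{2}$$

to be the even and odd parts of $f(x)$, respectively; note that $f(x) = f_e(x) + f_o(x)$. So we might simply say that $\cosh x$ and $\sinh x$ are the even and odd parts of $e^x$, respectively.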
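The even/odd decomposition is easy to express as higher-order functions. In this sketch, `even_part` and `odd_part` are hypothetical helper names. Applying them to `math.exp` should reproduce `math.cosh` and `math.sinh`, and adding the two parts should give back $e^x$.

```python
import math
from typing import Callable

def even_part(f: Callable[[float], float]) -> Callable[[float], float]:
    """Return the function x -> (f(x) + f(-x)) / 2."""
    return lambda x: (f(x) + f(-x)) / 2

def odd_part(f: Callable[[float], float]) -> Callable[[float], float]:
    """Return the function x -> (f(x) - f(-x)) / 2."""
    return lambda x: (f(x) - f(-x)) / 2

exp_even = even_part(math.exp)  # should match cosh
exp_odd = odd_part(math.exp)    # should match sinh

for x in [-1.5, 0.0, 0.7, 2.0]:
    print(f"x={x:4.1f}  even part={exp_even(x):.6f} cosh={math.cosh(x):.6f}  "
          f"odd part={exp_odd(x):.6f} sinh={math.sinh(x):.6f}")
```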

There are also many properties of the hyperbolic trigonometric functions which are reminiscent of their circular counterparts. For example, we have

$$\sinh(2x) = 2\sinh x \cosh x$$

and

$$\sinh(x + y) = \sinh x \cosh y + \cosh x \sinh y.$$

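None of these are especially difficult to prove using the definitions. It turns out that while there are many similarities, there are subtle differences. For example,

$$\cosh(x + y) = \cosh x \cosh y + \sinh x \sinh y,$$

whereas $\cos(x + y) = \cos x \cos y - \sin x \sin y$.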

That is, while some circular trigonometric formulas become hyperbolic just by changing $\cos$ to $\cosh$ and $\sin$ to $\sinh$, sometimes changes of sign are necessary.
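This numerical sketch shows the sign change in the addition formulas at one arbitrary pair, $x = 0.8$ and $y = 1.3$. The $\sinh$ formula carries over from $\sin$ unchanged. The $\cosh$ formula uses a plus sign where $\cos(x + y) = \cos x \cos y - \sin x \sin y$ uses a minus.

```python
import math

x, y = 0.8, 1.3

# Hyperbolic addition formulas: only the cosh formula differs in sign from its circular twin.
sinh_sum = math.sinh(x) * math.cosh(y) + math.cosh(x) * math.sinh(y)
cosh_sum = math.cosh(x) * math.cosh(y) + math.sinh(x) * math.sinh(y)  # plus sign

# Circular counterparts for comparison.
sin_sum = math.sin(x) * math.cos(y) + math.cos(x) * math.sin(y)
cos_sum = math.cos(x) * math.cos(y) - math.sin(x) * math.sin(y)       # minus sign

print(math.isclose(sinh_sum, math.sinh(x + y)), math.isclose(cosh_sum, math.cosh(x + y)))
print(math.isclose(sin_sum, math.sin(x + y)), math.isclose(cos_sum, math.cos(x + y)))

# Naively copying the circular minus sign does not give cosh(x + y).
wrong = math.cosh(x) * math.cosh(y) - math.sinh(x) * math.sinh(y)
print(wrong, math.cosh(x + y))
```

The "wrong" expression is not meaningless, though. The identity $\cosh x \cosh y - \sinh x \sinh y = \cosh(x - y)$ shows that it is simply $\cosh(x - y)$.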

These changes of sign from circular formulas are typical when working with hyperbolic trigonometry. One particularly interesting place where the change of sign arises is in differential equations, although given that I’m bringing hyperbolic trigonometry into a calculus class, I don’t emphasize this relationship. But recall that $y = \cos x$ is the unique solution to the differential equation

$$y'' + y = 0, \qquad y(0) = 1, \quad y'(0) = 0.$$

Similarly, we see that $y = \cosh x$ is the unique solution to the differential equation

$$y'' - y = 0, \qquad y(0) = 1, \quad y'(0) = 0.$$

Again, the parallel is striking, and the difference subtle.
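The differential-equation parallel can also be seen numerically. This sketch integrates $y'' = \pm y$ with $y(0) = 1$ and $y'(0) = 0$ using the classical fourth-order Runge-Kutta method. The solver is my choice for illustration and is not part of the discussion above. Flipping a single sign switches the numerical solution from $\cos t$ to $\cosh t$.

```python
import math

def rk4_second_order(sign: float, t_end: float, steps: int = 1000) -> float:
    """Integrate y'' = sign * y with y(0) = 1, y'(0) = 0 using classical RK4.

    sign = -1 gives y'' + y = 0 (solution cos t);
    sign = +1 gives y'' - y = 0 (solution cosh t).
    """
    h = t_end / steps
    y, v = 1.0, 0.0  # initial conditions y(0) = 1, y'(0) = 0

    def f(y, v):
        # First-order system: y' = v, v' = sign * y
        return v, sign * y

    for _ in range(steps):
        k1y, k1v = f(y, v)
        k2y, k2v = f(y + h / 2 * k1y, v + h / 2 * k1v)
        k3y, k3v = f(y + h / 2 * k2y, v + h / 2 * k2v)
        k4y, k4v = f(y + h * k3y, v + h * k3v)
        y += h / 6 * (k1y + 2 * k2y + 2 * k3y + k4y)
        v += h / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
    return y

for t in [0.5, 1.0, 2.0]:
    print(f"t={t}: y''+y=0 -> {rk4_second_order(-1, t):.8f} (cos {math.cos(t):.8f}),  "
          f"y''-y=0 -> {rk4_second_order(+1, t):.8f} (cosh {math.cosh(t):.8f})")
```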

Of course, it is straightforward to see from the definitions that $\frac{d}{dx}\cosh x = \sinh x$ and $\frac{d}{dx}\sinh x = \cosh x$.

Gone are the days of remembering signs when differentiating and integrating trigonometric functions! This is one feature of hyperbolic trigonometric functions which students always appreciate….
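A symmetric difference quotient gives a quick numerical confirmation of the derivative formulas, with no minus sign anywhere. The step size `h = 1e-5` is an arbitrary choice that keeps both truncation and rounding error small for these functions.

```python
import math

def central_diff(f, x: float, h: float = 1e-5) -> float:
    """Symmetric difference quotient (f(x + h) - f(x - h)) / (2h)."""
    return (f(x + h) - f(x - h)) / (2 * h)

for x in [-1.0, 0.0, 1.5]:
    print(f"x={x:4.1f}  d/dx cosh ~ {central_diff(math.cosh, x):.6f} vs sinh {math.sinh(x):.6f};  "
          f"d/dx sinh ~ {central_diff(math.sinh, x):.6f} vs cosh {math.cosh(x):.6f}")
```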

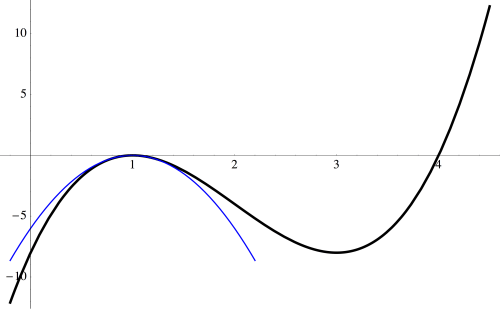

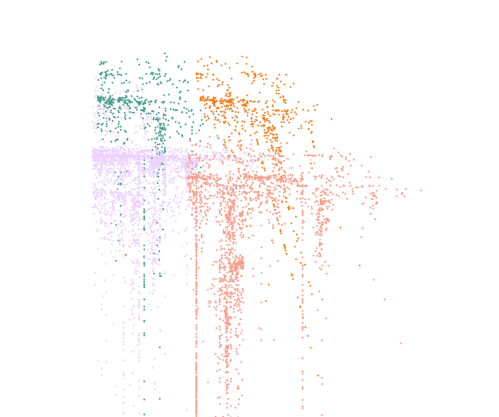

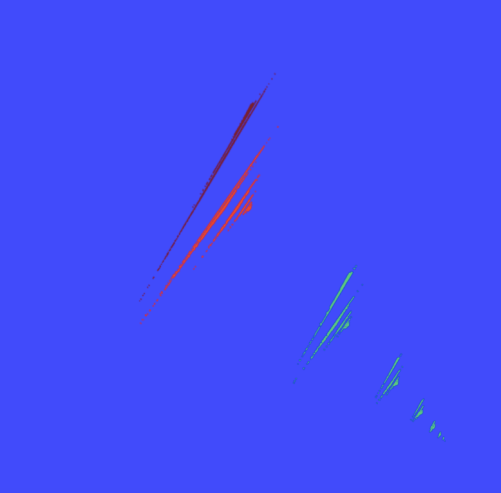

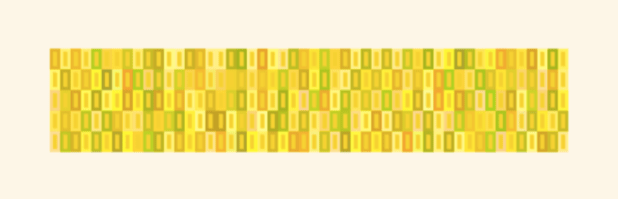

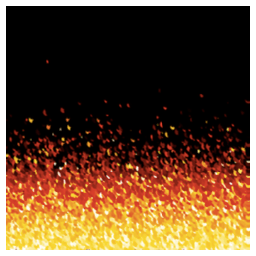

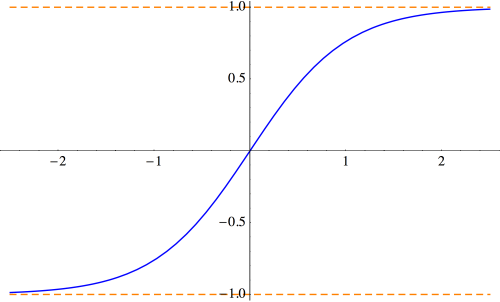

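Another nice feature is how well-behaved the hyperbolic tangent $\tanh x = \frac{\sinh x}{\cosh x}$ is. Since $\cosh x \ge 1$ never vanishes, there are no vertical asymptotes to worry about, as opposed to the case of $\tan x$. Below is the graph of $y = \tanh x$.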

The horizontal asymptotes $y = \pm 1$ are easily calculated from the definitions. This looks suspiciously like the curves obtained when modeling logistic growth in populations; that is, finding solutions to

$$\frac{dP}{dt} = kP\left(1 - \frac{P}{M}\right).$$

In fact, these logistic curves are shifted and scaled hyperbolic tangents, which we will address in more detail in a later post.
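As a preview of the logistic connection, here is a sketch under a few assumptions. Take the logistic equation $\frac{dP}{dt} = kP\left(1 - \frac{P}{M}\right)$ with $0 < P(0) = P_0 < M$. Its solution is $P(t) = \frac{M}{1 + Ae^{-kt}}$, where $A = \frac{M - P_0}{P_0}$. Using $\frac{1}{1 + e^{-u}} = \frac{1}{2}\left(1 + \tanh\frac{u}{2}\right)$, the same curve can be written as $P(t) = \frac{M}{2}\left(1 + \tanh\frac{kt - \ln A}{2}\right)$. The parameter values in the code are made up for illustration.

```python
import math

def logistic(t: float, M: float, k: float, P0: float) -> float:
    """Closed-form solution of dP/dt = k P (1 - P/M) with P(0) = P0, assuming 0 < P0 < M."""
    A = (M - P0) / P0
    return M / (1 + A * math.exp(-k * t))

def logistic_via_tanh(t: float, M: float, k: float, P0: float) -> float:
    """The same curve written as a shifted, scaled hyperbolic tangent."""
    A = (M - P0) / P0
    return (M / 2) * (1 + math.tanh((k * t - math.log(A)) / 2))

M, k, P0 = 1000.0, 0.3, 50.0  # illustrative values only, not from any data set
for t in [0.0, 5.0, 10.0, 20.0, 40.0]:
    print(f"t={t:5.1f}  logistic={logistic(t, M, k, P0):9.4f}  "
          f"tanh form={logistic_via_tanh(t, M, k, P0):9.4f}")

# tanh itself levels off at the horizontal asymptotes y = -1 and y = 1.
print(math.tanh(-20.0), math.tanh(20.0))
```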

One of the most interesting things about hyperbolic trigonometric functions is that their inverses have closed formulas in terms of logarithms, in striking contrast to their circular counterparts. I usually have students work this out, either in class or as homework; the derivation is quite nice, so I’ll outline it here.

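So let’s consider solving the equation $x = \sinh y$ for $y$.

Begin with the definition:

$$x = \frac{e^y - e^{-y}}{2}.$$

The critical observation is that this is actually a quadratic in $e^y$. Multiplying through by $2e^y$ gives

$$\left(e^y\right)^2 - 2x\,e^y - 1 = 0.$$

All that is necessary is to solve this quadratic equation to yield

$$e^y = x \pm \sqrt{x^2 + 1},$$

and note that $x - \sqrt{x^2 + 1}$ is always negative (since $\sqrt{x^2 + 1} > |x|$), while $e^y$ is always positive, so that we must choose the positive sign. Thus,

$$\sinh^{-1} x = \ln\left(x + \sqrt{x^2 + 1}\right).$$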
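This sketch checks the closed form against Python's `math.asinh` and does a round trip through `math.sinh`. It also confirms that the discarded root is negative. The function name `arcsinh_closed_form` is only for illustration.

```python
import math

def arcsinh_closed_form(x: float) -> float:
    """Inverse hyperbolic sine from the closed form ln(x + sqrt(x^2 + 1))."""
    return math.log(x + math.sqrt(x * x + 1))

for x in [-3.0, -0.5, 0.0, 1.0, 10.0]:
    y = arcsinh_closed_form(x)
    # Compare with math.asinh, and check the round trip sinh(arcsinh(x)) == x.
    print(f"x={x:5.1f}  ln formula={y:.10f}  math.asinh={math.asinh(x):.10f}  sinh(y)={math.sinh(y):.10f}")

# The discarded root x - sqrt(x^2 + 1) is negative for every x, so its logarithm is undefined.
print(all(x - math.sqrt(x * x + 1) < 0 for x in [-100.0, -1.0, 0.0, 1.0, 100.0]))
```

There is one caveat if students try this for large negative $x$. In that case $x + \sqrt{x^2 + 1}$ suffers from cancellation and can even round to 0. A more careful version uses the fact that $\sinh^{-1}$ is odd and computes $-\ln\left(-x + \sqrt{x^2 + 1}\right)$ for $x < 0$.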

And this is just the beginning! At this stage, I also offer more thought-provoking questions like, “Which is larger, or

These get students working with the definitions and thinking about asymptotic behavior.

Next week, I’ll go into more depth about the calculus of hyperbolic trigonometric functions. Stay tuned!